Look, let’s cut to the chase. AI is no longer coming—it’s here, radically transforming how we work. And make no mistake, this isn’t just for tech folks. Anyone can leverage AI today using simple language commands instead of code.

My team’s research confirms what I’ve seen firsthand: over 40% of U.S. work activity can be enhanced or completely reimagined with generative AI. Legal, banking, insurance, and capital markets will feel this shift most intensely, with retail, travel, healthcare, and energy close behind.

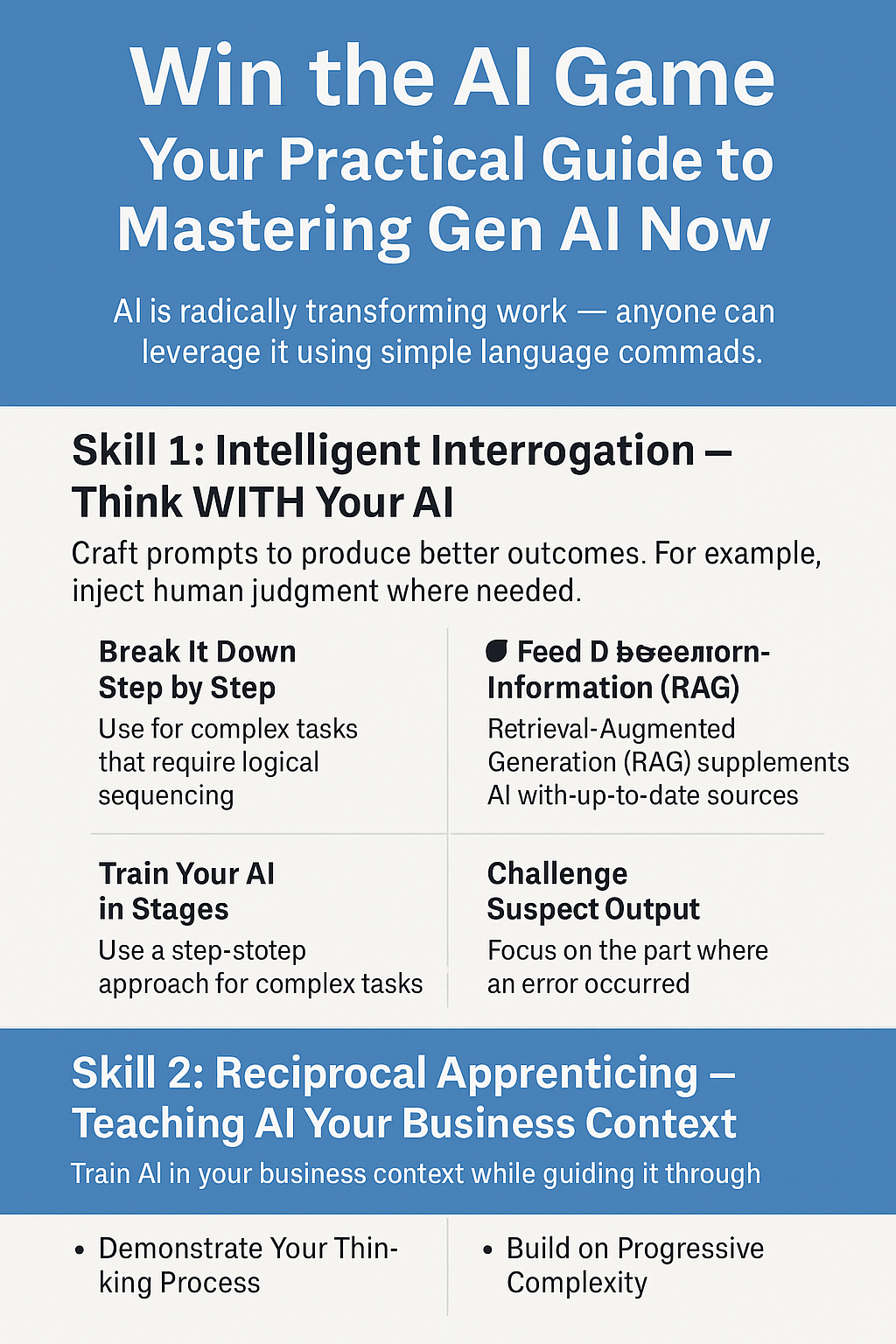

Here’s the reality check: your future success hinges on how effectively you partner with large language models like ChatGPT. I’ve been in the trenches with teams implementing these technologies, and I can tell you that three critical “fusion skills” separate those who thrive from those who merely survive.

Skill 1: Intelligent Interrogation – Think WITH Your AI

I meet too many professionals who treat AI like a search engine, tossing basic queries at it and expecting magic. That’s leaving serious value on the table. Intelligent interrogation means crafting prompts that produce genuinely better outcomes—it’s about thinking with AI, not just asking it questions.

Let me share three approaches that actually work:

Break It Down, Step by Step

Remember how scientific management transformed manufacturing? We need that same rigor with AI prompting. Break down your process into clear steps and optimize each one.

Try this right now: Add the simple phrase “Let’s think step by step” to your next complex prompt. Research shows this can boost accuracy more than threefold across various tasks. Here’s a real example I used with my finance team last week:

My department has a budget of $500,000. We’ve spent 20% on equipment and allocated 30% for a new hire. We just received a budget increase of $50,000. What is our remaining budget? Let’s think step by step.

The AI responded: “Initially, your department had $500,000. You spent 20%, or $100,000, on equipment, leaving $400,000. You allocate 30%, or $150,000, for a new hire, which brings the budget down to $250,000. Finally, you recently received a budget increase of $50,000. Your remaining budget is $300,000.”

This creates a traceable reasoning chain I can verify rather than a black-box answer. For complex problems—like optimizing a sales rep’s travel route across multiple cities—this approach is invaluable.
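If you'd rather not take the AI's arithmetic on faith, a few lines of Python can replay the chain. This is only a minimal sketch: the variable names are illustrative, and the second calculation assumes a different reading of the prompt (30% of what was left after the equipment purchase) purely to show how a visible chain surfaces hidden assumptions.

```python
# Replay each step of the AI's budget reasoning from the example above.
initial_budget = 500_000
increase = 50_000

# Reading 1 (the AI's): both percentages apply to the original budget.
equipment = initial_budget * 20 // 100          # 100,000
after_equipment = initial_budget - equipment    # 400,000
new_hire = initial_budget * 30 // 100           # 150,000
remaining = after_equipment - new_hire + increase

# Reading 2 (an assumption for comparison): the 30% applies to what is
# left after the equipment purchase.
new_hire_alt = after_equipment * 30 // 100      # 120,000
remaining_alt = after_equipment - new_hire_alt + increase

print(remaining)      # 300000
print(remaining_alt)  # 330000
```

The first figure matches the AI's answer of $300,000. The second is what you'd get under the other reading, which is exactly the kind of ambiguity a step-by-step answer lets you catch.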

Train Your AI in Stages

When I worked with a pharmaceutical client on R&D processes, we realized their domain expertise was too complex to dump into a single prompt. Instead, we introduced the AI to their work gradually, in distinct stages.

MIT researchers have shown this can work. They successfully trained ChatGPT to understand DNA structural biophysics by breaking that complicated task into sequential subtasks. For your inventory management challenges, try this prompt structure:

I want to optimize our inventory management process using a step-by-step approach. Let’s break this down into stages:

- First, analyze our historical demand patterns using this data: [INSERT YOUR DATA]

- Based on that analysis, what inventory levels should we maintain?

- Now, project our reorder points considering our current supplier lead times of [X] days.

- Calculate optimal order quantities given our storage constraints of [Y] cubic feet.

- Finally, evaluate how this approach would have performed against last quarter’s actual results.

Let’s start with step 1, then proceed through each stage in order.
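If your team wants to script this rather than type each stage by hand, the staged structure above can be sketched roughly as follows. Treat it as an illustration under assumptions: `run_stages` and `fake_ask` are hypothetical names, and `fake_ask` is a stand-in for whatever approved model call your organization actually uses.

```python
from typing import Callable, List


def run_stages(stages: List[str], ask: Callable[[str], str]) -> List[str]:
    """Send each stage in order, carrying earlier answers forward as context."""
    answers: List[str] = []
    for number, stage in enumerate(stages, start=1):
        # Summarize what the model has already said in previous stages.
        context = "\n".join(
            f"Stage {i} answer: {a}" for i, a in enumerate(answers, start=1)
        )
        prompt = f"{context}\n\nStage {number}: {stage}".strip()
        answers.append(ask(prompt))
    return answers


def fake_ask(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: echoes the last prompt line."""
    return "reply to [" + prompt.splitlines()[-1] + "]"


stages = [
    "Analyze our historical demand patterns.",
    "What inventory levels should we maintain?",
    "Project our reorder points.",
]
results = run_stages(stages, fake_ask)
print(results[0])  # reply to [Stage 1: Analyze our historical demand patterns.]
```

The point of the design is that every stage sees the answers that came before it, so the model builds on its own earlier work instead of starting cold each time.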

Explore Multiple Paths

Business isn’t linear, and your AI exploration shouldn’t be either. For strategy design, product development, or any creative process, guide your AI to lay out multiple potential solutions.

I recently worked with a CPG marketing team that supercharged their forecasting accuracy by 43% using this technique. They primed their AI prompts to function as “superforecasters”—assigning probabilities to possible outcomes and arguing both for and against each one.

Try this approach:

I’m developing our go-to-market strategy for [product]. Rather than seeking a single answer, I want to explore multiple approaches:

- Generate three distinct strategy options that could achieve our goal of [specific objective].

- For each strategy, provide:

  - A probability of success (%)

  - Key strengths and advantages

  - Potential weaknesses and risks

  - Resource requirements

- Identify the conditions under which each approach would be optimal.

- Suggest how we might combine elements from different approaches.

Let’s think expansively rather than converging too quickly.

Skill 2: Judgment Integration – Because Machines Still Need Human Wisdom

Let’s be real: AI can hallucinate, rely on outdated information, and miss the ethical grounding and contextual understanding that you bring to the table. Judgment integration means knowing where and how to inject your human discernment into the process.

Here are three approaches I’ve seen work consistently:

Feed It Better Information (RAG)

I was working with a healthcare provider whose AI kept generating outdated treatment recommendations. The fix? Retrieval Augmented Generation (RAG).

RAG supplements what an LLM learned in training with information retrieved from authoritative knowledge bases at the moment you ask a question. This cuts down on much of the misinformation and outdated responses that plague off-the-shelf tools.

Here’s a practical prompt template I’ve used to leverage RAG effectively:

I need accurate information about [topic] that includes the latest developments as of [current date].

Before answering, please consider:

- Any data or research published in the last [X months/years]

- Information from these specific authoritative sources: [list trusted sources]

- Current regulatory guidelines from [relevant authorities]

If you’re uncertain about any information, please indicate this clearly rather than making assumptions.

Note: You’ll likely need your IT team to properly implement RAG in your workflows, but this prompt approach helps maximize its value.
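For the curious, here is roughly what the retrieval step looks like under the hood. This is a toy sketch, not how your IT team would build it: the keyword-overlap scoring is a deliberately simple stand-in for the search a production system would use, and the function names and knowledge-base entries are made up for illustration.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, documents: List[str], top_k: int = 2) -> str:
    """Put the retrieved passages in front of the question before asking the model."""
    passages = retrieve(question, documents, top_k)
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not cover the question, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Made-up placeholder entries standing in for a trusted knowledge base.
knowledge_base = [
    "Policy A: refunds are processed within 14 days of a return request.",
    "Policy B: standard shipping takes 3 to 5 business days.",
    "Policy C: gift cards cannot be exchanged for cash.",
]
prompt = build_prompt(
    "How long do refunds take to be processed?", knowledge_base, top_k=1
)
print(prompt)
```

The model only ever sees the passage that was pulled from your own sources, which is why the quality of the knowledge base matters as much as the quality of the prompt.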

Guard Your Data and Watch for Bias

Here’s something I learned the hard way: if you’re using confidential data, only use company-approved AI models behind your firewall. Never paste sensitive information into public LLMs.

I also regularly catch myself embedding subtle biases into my prompts. Recently, I asked an LLM to explain how a quarterly report signaled a five-year growth trajectory—classic recency bias in action. I was overweighting the most recent information when predicting future events.

Try this prompt approach to minimize bias:

I want to analyze [topic/data/situation] while consciously avoiding common cognitive biases. Please help me consider:

- Multiple time horizons (not just recent events)

- Contradictory evidence that challenges my initial hypothesis

- Base rates and broader context beyond my immediate experience

- Alternative explanations for the patterns I’m seeing

My initial perspective is [your view], but I want to ensure I’m considering this objectively.

Challenge Suspect Output

When you see questionable AI output, your instinct might be to say “try again” repeatedly. Don’t. UC Berkeley researchers found this actually degrades response quality over time.

Instead, identify exactly where the error occurred and have a separate LLM tackle just that part. I’ve used this technique with product development teams to troubleshoot complex calculations.

Try this approach when you spot an error:

I notice an error in your reasoning at step [X]. Instead of redoing the entire analysis, let’s focus specifically on this part:

- The problematic step is: [paste the specific section]

- I believe the issue is: [explain what seems wrong]

Please break this specific step down into smaller sub-problems and explain your reasoning for each one. Once we correct this step, we’ll integrate it back into the full analysis.

Skill 3: Reciprocal Apprenticing – Teaching AI Your Business Context

This is where the magic happens. Reciprocal apprenticing means training AI on your specific business context while simultaneously learning how to guide it through increasingly complex challenges.

I’ve implemented this with teams across industries, and here are three approaches that deliver results:

Demonstrate Your Thinking Process

Before giving an LLM a complex problem, prime it with examples of how you want it to think. The “least-to-most” approach, which breaks a complex challenge into progressively harder subtasks, is particularly powerful. Google DeepMind research shows it can boost accuracy on some tasks from a dismal 16% to an impressive 99%.

Here’s a practical template I used with a retail marketing team:

I want you to approach this marketing challenge using a “least-to-most” reasoning method, working from simpler questions to more complex ones. Here’s how I want you to think through launching our new [product]:

- START WITH AUDIENCE: Who are our potential customers for this product? Consider demographics, behaviors, and needs.

- THEN DEVELOP MESSAGING: Based on the audience identified, what key messages would resonate with them? Focus on performance, features, and emotional benefits.

- NEXT DETERMINE CHANNELS: Given our audience and messaging, which channels would most effectively reach them? Consider digital, traditional, and partnership opportunities.

- FINALLY ALLOCATE RESOURCES: How should we distribute our $[X] budget across these channels for maximum impact?

Let’s solve each component in this specific order, using the answer from each step to inform the next.

Teach Through Examples

One of the most effective techniques I’ve implemented is “in-context learning”—teaching AI by showing examples within your prompts. This approach lets you customize pre-trained models without technical complexity.

Medical researchers found that LLMs shown examples of medical summaries produced results as good as or better than human-written summaries 81% of the time. I’ve applied similar techniques with sales teams to dramatic effect.

Try this prompt structure:

I want to train you to help with [specific task] in our unique business context. Let me show you several examples of how we approach this:

EXAMPLE 1:

Input: [specific business scenario]

Our approach: [detailed explanation of how your team handles this]

Outcome: [results achieved]

EXAMPLE 2:

Input: [different scenario]

Our approach: [different approach]

Outcome: [results]

EXAMPLE 3:

[continue pattern]

Now, I’d like you to apply this same approach to the following new situation:

[describe current challenge]
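If you reuse this template often, it can help to assemble it from stored examples instead of retyping it. The sketch below is one way to do that; `Example` and `build_few_shot_prompt` are hypothetical names, and the sample entries are placeholders rather than real cases.

```python
from typing import List, NamedTuple


class Example(NamedTuple):
    scenario: str
    approach: str
    outcome: str


def build_few_shot_prompt(
    task: str, examples: List[Example], new_situation: str
) -> str:
    """Assemble an in-context learning prompt from worked examples."""
    parts = [f"I want you to help with {task}. Here is how we approach it:"]
    for n, ex in enumerate(examples, start=1):
        parts.append(
            f"EXAMPLE {n}:\n"
            f"Input: {ex.scenario}\n"
            f"Our approach: {ex.approach}\n"
            f"Outcome: {ex.outcome}"
        )
    parts.append(
        f"Now apply the same approach to this new situation:\n{new_situation}"
    )
    return "\n\n".join(parts)


# Placeholder examples; swap in your team's real cases.
examples = [
    Example("[scenario one]", "[how we handled it]", "[what happened]"),
    Example("[scenario two]", "[how we handled it]", "[what happened]"),
]
prompt = build_few_shot_prompt(
    "[specific task]", examples, "[describe current challenge]"
)
print(prompt)
```

Keeping the examples as data makes it easy to add, remove, or reorder them as you learn which ones steer the model best.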

Build on Progressive Complexity

The real power of reciprocal apprenticing comes when you progressively increase complexity. I’ve worked with sales teams who started with basic data analysis and gradually built toward sophisticated customer segmentation models.

Consider one of the software companies I advised on boosting sales. Its sales leader began by providing historical data and asking about quarterly demand forecasts. Then he added information on customer software upgrades and annual budgets, asking about seasonality effects. Finally, he incorporated CRM statistics and marketing reports, exploring campaign impact on sales.

Try this progressive approach:

I want to build a model of our [business process] step by step, each time adding more complexity.

ROUND 1 – BASIC FRAMEWORK:

Here’s our foundational data: [insert simplest version of relevant data]

Question: What initial patterns or insights can you identify?

ROUND 2 – ADDING CONTEXT:

Now let’s incorporate these additional factors: [insert next layer of complexity]

Question: How does this new information refine your understanding?

ROUND 3 – ADVANCED ANALYSIS:

Finally, let’s integrate these complex elements: [insert most sophisticated data]

Question: Given everything we’ve discussed, what comprehensive recommendations can you make?

As we progress, I’ll provide feedback on what works for our specific business context.

Now What? Getting Started Today

Here’s the hard truth: waiting for your organization to train you on these skills is probably a losing strategy. Our 2024 survey of 7,000 professionals revealed that while 94% were ready to learn gen AI skills, only 5% reported their employers were actively training their workforces at scale.

You need to take matters into your own hands. I’ve seen countless professionals transform their careers by proactively developing these capabilities while their peers waited for formal training.

Start simple:

- Take an online course from Coursera, Udacity, or university programs like those at UT Austin, ASU, or Vanderbilt.

- Experiment with the prompting techniques I’ve shared using your own business challenges.

- Advocate for opportunities to integrate these tools into your workflow.

What’s coming next? You’ll need skills for chain-of-thought prompting with multimodal large language models (MLLMs) that handle text, audio, video, and images simultaneously. Research shows the right prompting can double their performance.

Bottom line: this isn’t a technology wave you can afford to watch from the shore. No major innovation in history has spread this fast, and none is poised to transform knowledge work so profoundly. The future won’t be built by AI alone. It will be shaped by people like you who master these fusion skills and apply them thoughtfully to real business challenges.

What are you waiting for? The game has already started.